Introduction #

RELIANOID ADC supports four types of Network Address Translation (NAT): Source NAT (SNAT), Destination NAT (DNAT), Direct Server Return (DSR), and stateless DNAT. In this article, we delve into Direct Server Return (DSR), exploring its architecture, benefits, and potential hurdles. We will also set up DSR in Relianoid ADC.

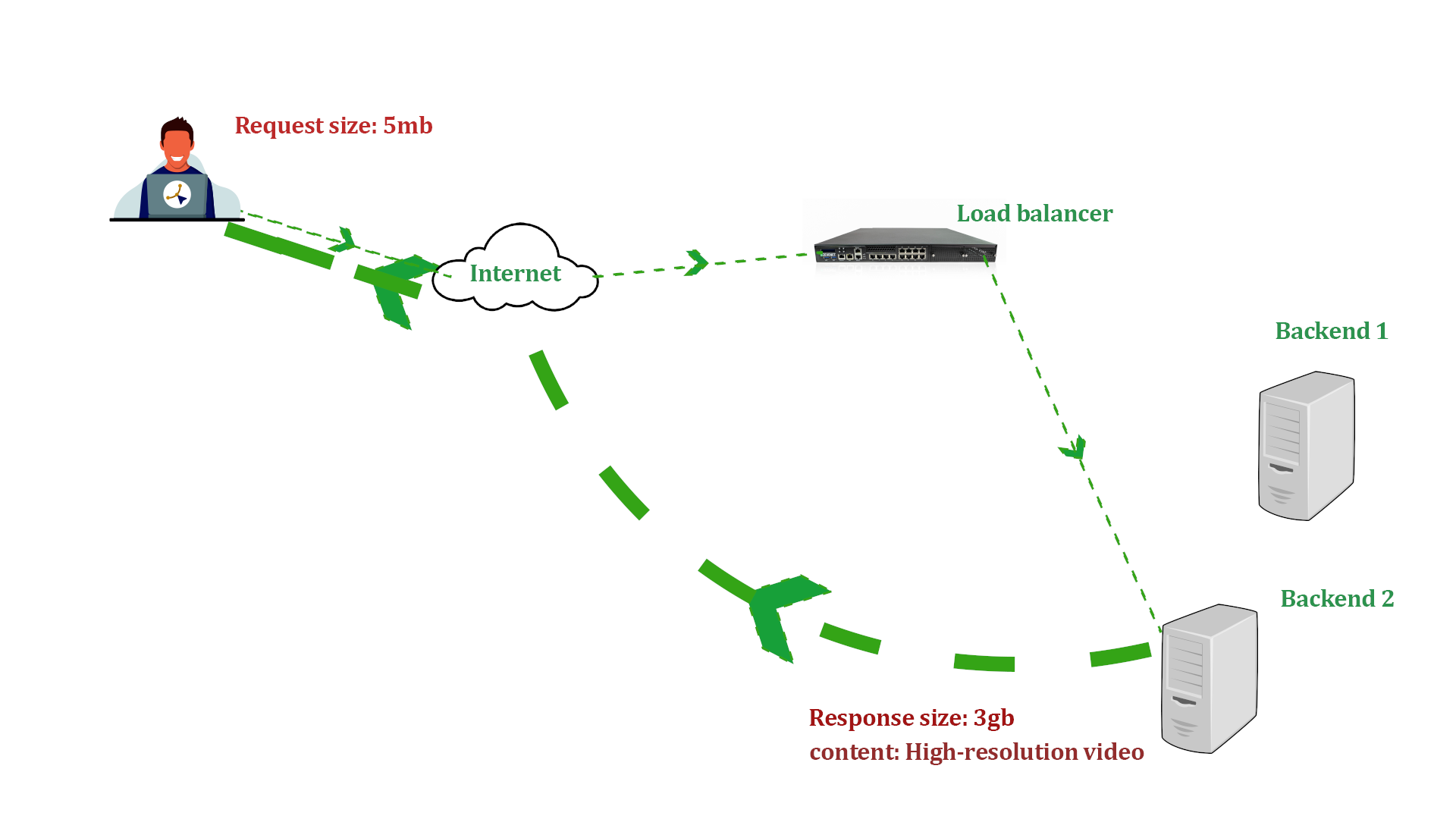

With DSR, backend application servers respond directly to the client after receiving and processing a request, instead of sending the response back through the load balancer. But how does this work? Here is how the communication flows between the servers and web clients.

DSR Communication Flow #

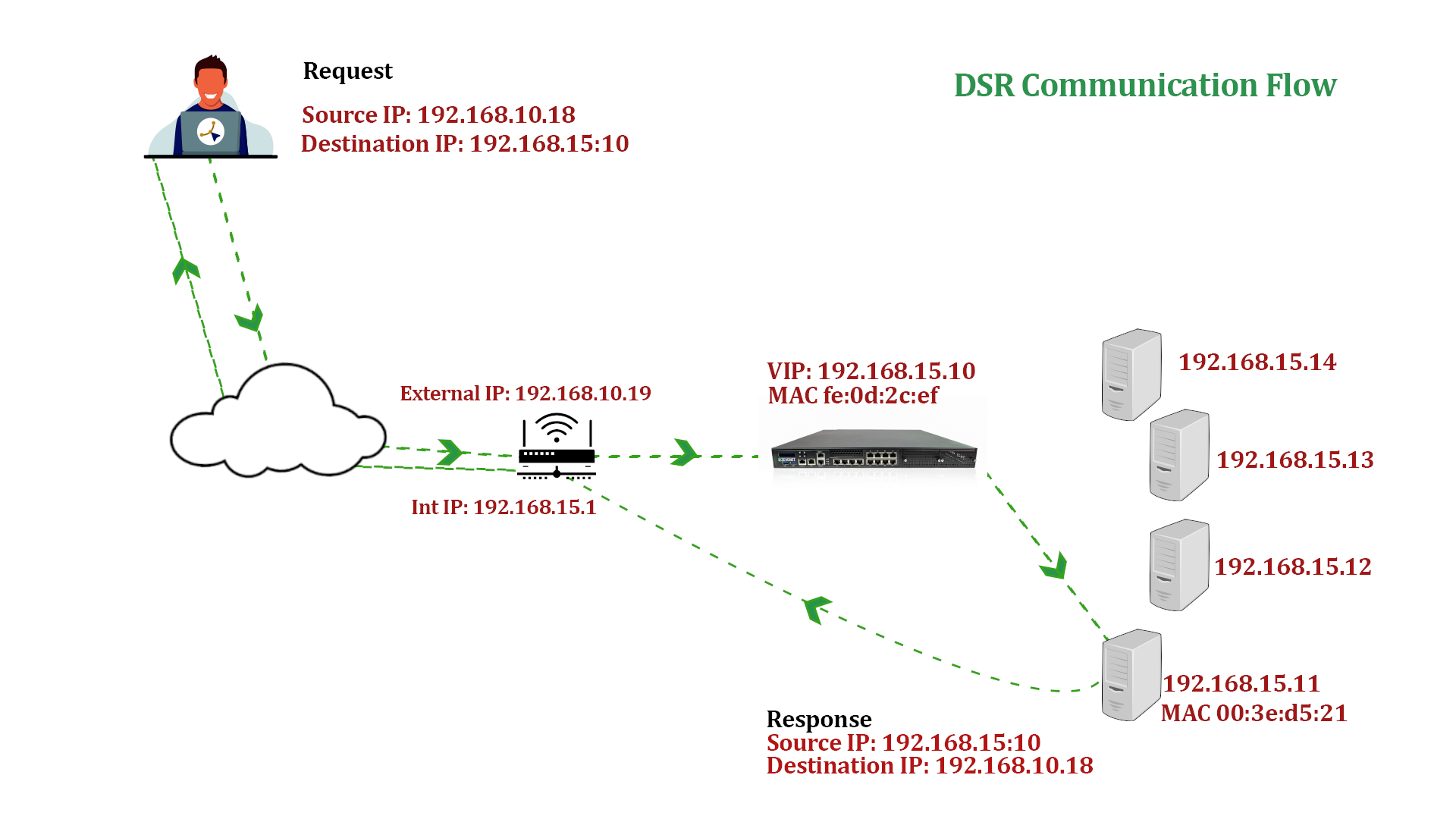

Client Request: A client initiates a request, such as accessing streamable media files or sending data to application servers via Relianoid ADC.

Load balancer interaction: Upon receiving the request, Relianoid leaves the request unchanged except for the destination MAC address, which it rewrites to the MAC address of the backend server selected to process the request. The load balancer selects that backend according to the configured load balancing algorithm and forwards the request to it.

Backend Application Server: Upon receiving the request, the backend server processes the request and generates a response.

Direct Response: The backend server then sends the response directly to the client device, completing the communication loop.
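The forwarding step above can be pictured with a toy model. This is only an illustrative sketch, not how Relianoid is implemented: a request is reduced to three space-separated fields (destination MAC, source IP, destination IP), and the function name `dsr_forward` and all addresses are made up for the example.

```shell
# Toy model of the load balancer step in DSR.
# A "frame" is three space-separated fields: dst_mac src_ip dst_ip
dsr_forward() {
    frame=$1
    backend_mac=$2
    # Split the frame into its fields ($1=dst_mac, $2=src_ip, $3=dst_ip)
    set -- $frame
    # Only the destination MAC is replaced; both IP addresses pass through
    echo "$backend_mac $2 $3"
}

# Client 203.0.113.7 sends a request to VIP 192.168.0.99 (hypothetical values)
dsr_forward "bb:bb:bb:bb:bb:01 203.0.113.7 192.168.0.99" "cc:cc:cc:cc:cc:01"
# prints: cc:cc:cc:cc:cc:01 203.0.113.7 192.168.0.99
```

Because the destination IP is still the VIP when the frame reaches the backend, the backend must own the VIP locally to accept it, and it can answer the client with the VIP as its source address.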

Important Note

- Relianoid typically responds to ARP requests for the virtual IP on behalf of the backend servers, so that client traffic addressed to the VIP reaches the load balancer first. Therefore, correct ARP configuration is crucial to ensure proper routing of packets.

- One must carefully plan the IP addressing scheme to avoid conflicts and ensure proper communication between clients and backend servers. We usually configure the backend servers with the same IP address as the virtual IP (VIP) used by the L4xNAT farm, but the backends must not announce it over ARP, to avoid conflicts.
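One way to check the ARP behavior described above is to look at which MAC address a client in the same network has learned for the VIP. The helper below is a sketch: the function name `neigh_mac` is hypothetical, and it assumes the usual `ip neigh show` line format (`<ip> dev <if> lladdr <mac> <state>`). The VIP 192.168.0.99 is the example address used later in this article.

```shell
# Print the MAC address that a neighbor-table listing maps to the given IP.
# Reads `ip neigh show` style lines on stdin, for example:
#   192.168.0.99 dev eth0 lladdr 00:11:22:33:44:55 REACHABLE
neigh_mac() {
    awk -v ip="$1" '$1 == ip {
        for (i = 1; i < NF; i++) if ($i == "lladdr") print $(i + 1)
    }'
}

# On a client in the same network as the VIP:
#   ip neigh show | neigh_mac 192.168.0.99
```

The printed MAC address should belong to the load balancer. If it belongs to a backend, that backend is answering ARP requests for the VIP and its ARP settings need to be reviewed.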

Why Use DSR For Your Network Infrastructure #

DSR has become important in today’s network infrastructure because it can handle massive amounts of data without causing major bottlenecks. Besides scalability, versatility, high availability, and fault tolerance, DSR stands out for the following reasons:

Turbocharged Performance: Because responses do not make the extra hop back through the load balancer, DSR reduces latency on the return path. This setup suits gaming and video streaming, where the efficient delivery of substantial data chunks is crucial.

For instance, in multiplayer games, DSR enables direct communication between game clients and game servers without the load balancer mediating every data packet. This direct communication allows faster and more efficient transmission of game-related data, such as player movements, actions, and updates. As a result, DSR reduces latency, enhances the gaming experience, and contributes to smoother gameplay.

Similarly, in video streaming, when a client requests a video stream, the backend servers can transmit the video data directly to the client without routing it through the load balancer. Removing the load balancer from the return data path minimizes potential bottlenecks and helps ensure a seamless streaming experience for viewers. This is particularly beneficial for high-quality or high-resolution video content, where efficient handling of large data chunks is essential for uninterrupted playback.

Reduced Load on the Load Balancer: With DSR, we relieve the load balancer of handling return traffic from the backend servers. This offloading significantly reduces the processing burden on the load balancer, allowing it to focus on efficiently distributing incoming requests. As a result, the load balancer can handle a higher volume of traffic and achieve better overall scalability.

No need to maintain a routing table: Routing can be complex, especially in large-scale networks with multiple subnets and intricate routing policies. By not maintaining a routing table for return traffic, the load balancer avoids the need to handle and manage complex routing configurations, reducing the chances of misconfigurations or routing-related issues.

Relianoid Configurations for Linux and Windows backend servers #

To enable DSR, you must first configure a layer 4 virtual server or an L4xNAT farm. Read this article to create one.

Requirements for DSR: #

- The virtual IP and backends must be in the same network.

- The Virtual Port and the Backend Ports must be the same.

- One must configure the loopback interface of each backend with the same IP address as the VIP configured in the load balancer, and disable ARP on this interface.
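The first requirement can be checked before touching any configuration. The following is a minimal sketch in POSIX shell: the function names `ip_to_int` and `same_network` are hypothetical, and the backend address 192.168.0.10 and the /24 prefix are assumptions chosen for the example.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if two addresses fall in the same network for a given prefix length.
same_network() {
    vip=$(ip_to_int "$1")
    backend=$(ip_to_int "$2")
    prefix=$3
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    [ $(( vip & mask )) -eq $(( backend & mask )) ]
}

if same_network 192.168.0.99 192.168.0.10 24; then
    echo "VIP and backend share a network"
else
    echo "VIP and backend are in different networks"
fi
```

With the example values, the check reports that the VIP and the backend share a network. A backend at 192.168.1.10 with the same /24 prefix would fail the check and could not be used for DSR.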

Linux backend servers #

# ifconfig lo:0 192.168.0.99 netmask 255.255.255.255 -arp up

With this command, we create a virtual network interface lo:0 with the IP address 192.168.0.99 and a subnet mask of 255.255.255.255.

The -arp flag disables Address Resolution Protocol (ARP) on this interface.
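On distributions that ship without `ifconfig`, the same address can be added with the iproute2 `ip` command. This is a sketch under assumptions: the `run` and `add_vip_to_loopback` helpers are hypothetical, and the script only prints the command unless `DRY_RUN=0` is set. It does not try to replicate the -arp flag and relies instead on the kernel ARP settings covered in this article.

```shell
VIP="192.168.0.99"   # VIP from the ifconfig example

# Print commands by default; set DRY_RUN=0 and run as root to apply them.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Add the VIP as a /32 address on the loopback device, labelled lo:0 so
# that it still appears under that alias name.
add_vip_to_loopback() {
    run ip addr add "$1/32" dev lo label lo:0
}

add_vip_to_loopback "$VIP"
```

After applying it, `ip addr show dev lo` should list the VIP alongside 127.0.0.1.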

Disabling invalid ARP responses in the backend. #

# echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

This command sets arp_ignore to 1 by writing to the file /proc/sys/net/ipv4/conf/all/arp_ignore. This parameter determines how the kernel responds to ARP requests. With a value of 1, the kernel replies only when the requested IP address is configured on the interface that received the request, so the backend does not answer ARP requests for the VIP held on its loopback interface.

# echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

This command sets the arp_announce parameter on the backend servers. It controls which source IP address the kernel places in the ARP requests it sends. With a value of 2, the kernel ignores the source address of the outgoing IP packet and always uses the best local address on the outgoing interface for the target. This matters in DSR configurations because the backend replies to clients with the VIP as the source address. Without this setting, it could announce the VIP in its own ARP requests, and neighbors such as the gateway would learn the backend’s MAC address for the VIP instead of the load balancer’s.
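Values written to /proc/sys are lost on reboot. One common way to keep them is a sysctl.d configuration file, sketched below. The helper name `write_dsr_sysctl_conf` and the file name `90-dsr-arp.conf` are arbitrary choices for this example.

```shell
# Write the two ARP settings in sysctl.d format to the given file.
write_dsr_sysctl_conf() {
    cat > "$1" <<'EOF'
# ARP behaviour for DSR backends
net.ipv4.conf.all.arp_ignore = 1
net.ipv4.conf.all.arp_announce = 2
EOF
}

# As root on the backend:
#   write_dsr_sysctl_conf /etc/sysctl.d/90-dsr-arp.conf
#   sysctl --system
```

`sysctl --system` reloads all configuration files, so the settings take effect immediately and are applied again at boot.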

Windows backend servers #

- Start->Settings->Control Panel->Network and Dial-up Connections.

- Right-click on your network adapter and click Properties.

- Only Internet Protocol must be selected (unselect “Client for MS Networks” and “File and Printer sharing”).

- TCP/IP Properties-> Enter the IP address of the VIP in the Relianoid ADC farm. The default gateway is optional. Enter the mask 255.255.255.255.

- Set Interface Metric to 254. This configuration is required to stop the server from replying to ARP requests for the VIP.

- Press OK and save the changes.

Afterwards, configure the host security model to accept traffic from Relianoid ADC on the NIC interface. Additionally, allow Relianoid ADC to send and receive traffic through the loopback interface. Open CMD as an administrator and execute the following three commands.

netsh interface ipv4 set interface NIC weakhostreceive=enabled

netsh interface ipv4 set interface loopback weakhostreceive=enabled

netsh interface ipv4 set interface loopback weakhostsend=enabled

Important Note

Replace NIC and loopback in the commands above with the actual interface names on your Windows server.

Challenges of Using DSR #

While Direct Server Return (DSR) offers numerous benefits, it also presents challenges that organizations need to consider and address. Understanding these challenges will help plan and implement DSR effectively. Here are some common challenges associated with DSR:

Asymmetric Routing: This means the forward and return paths take different routes. While this may have merits, asymmetric routing can complicate network troubleshooting and monitoring since the traffic flow is not symmetrical.

Server Compatibility: Not all servers will support DSR with all types of applications. For example, with Relianoid, we may only perform DSR with Linux or Windows backend servers.

Stateful Operations: For stateful operations that rely on maintaining session information, DSR can pose challenges. With the other NAT types, the load balancer handles all forms of session persistence, but with DSR, the direct return path bypasses it. One way to circumvent this is to use source IP address persistence at layer 4 and cookie insert at layer 7 for session persistence.
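The idea behind source IP persistence can be sketched in a few lines: hash the client address and use the result to select a backend, so the same client always lands on the same server. This is only an illustration of the principle, not Relianoid’s algorithm. The function name `pick_backend` and all addresses are hypothetical.

```shell
# Pick a backend for a client IP by hashing the IP with cksum (CRC) and
# taking the result modulo the number of backends.
pick_backend() {
    client_ip=$1
    shift                       # remaining arguments are the backends
    hash=$(printf '%s' "$client_ip" | cksum | cut -d ' ' -f 1)
    index=$(( hash % $# + 1 ))
    # Print the index-th remaining argument
    eval "echo \${$index}"
}

# The same client IP always maps to the same backend in this list
pick_backend 203.0.113.7 192.168.0.10 192.168.0.11 192.168.0.12
pick_backend 203.0.113.7 192.168.0.10 192.168.0.11 192.168.0.12
```

Note that with this simple modulo scheme, adding or removing a backend changes the mapping for many clients, which is one reason real load balancers usually keep a persistence table with timeouts instead.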

Network Visibility and Monitoring: DSR can impact network visibility and monitoring since traffic bypasses load balancers or reverse proxies. Monitoring tools and systems that rely on traffic inspection or interception at these intermediaries may not capture the complete picture of network traffic. Organizations may implement alternative monitoring solutions to ensure visibility into the traffic flowing through DSR paths.

Deployment Complexity: Implementing DSR may introduce additional complexity during deployment and configuration. Proper planning, design, and testing are crucial to ensure a smooth implementation. For example, you may need to set each backend server to perform SSL offloading and logging.

Security Considerations: DSR can introduce security challenges, because return traffic bypasses the security measures implemented at the load balancer. Sometimes you may need to alter response headers, which is impossible with DSR setups since responses never pass through the load balancer.

By proactively addressing these challenges, organizations can successfully implement DSR and leverage its benefits while minimizing potential drawbacks.

Conclusion #

Direct Server Return (DSR) is a load-balancing approach that can bring significant advantages to your infrastructure. By offloading the return traffic from the load balancer and allowing backend servers to send responses directly to clients, DSR reduces the load on the load balancer and improves overall system scalability.

Another advantage could be lower network latency as responses take a more direct path to clients, bypassing the load balancer. This can be particularly advantageous for latency-sensitive applications, ensuring faster delivery of content and improved user experience.

However, carefully evaluate the specific requirements of your network architecture and application needs before implementing DSR. Consider factors such as network topology, routing protocols, the need for session persistence, and potential associated challenges.

By leveraging DSR’s benefits, you can optimize your infrastructure to handle increasing traffic loads and deliver a seamless user experience.